A.L.B.E.R.T. V1

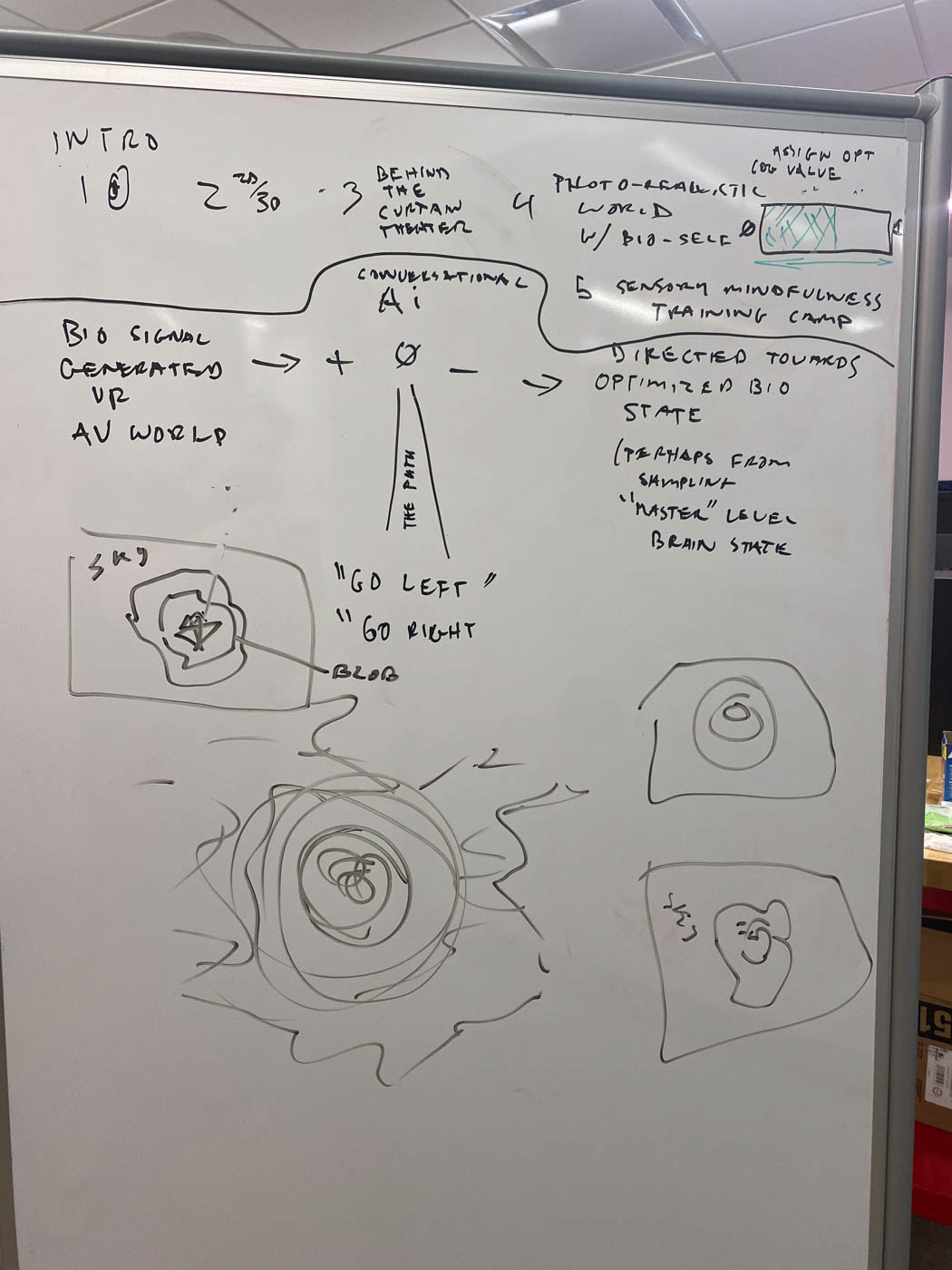

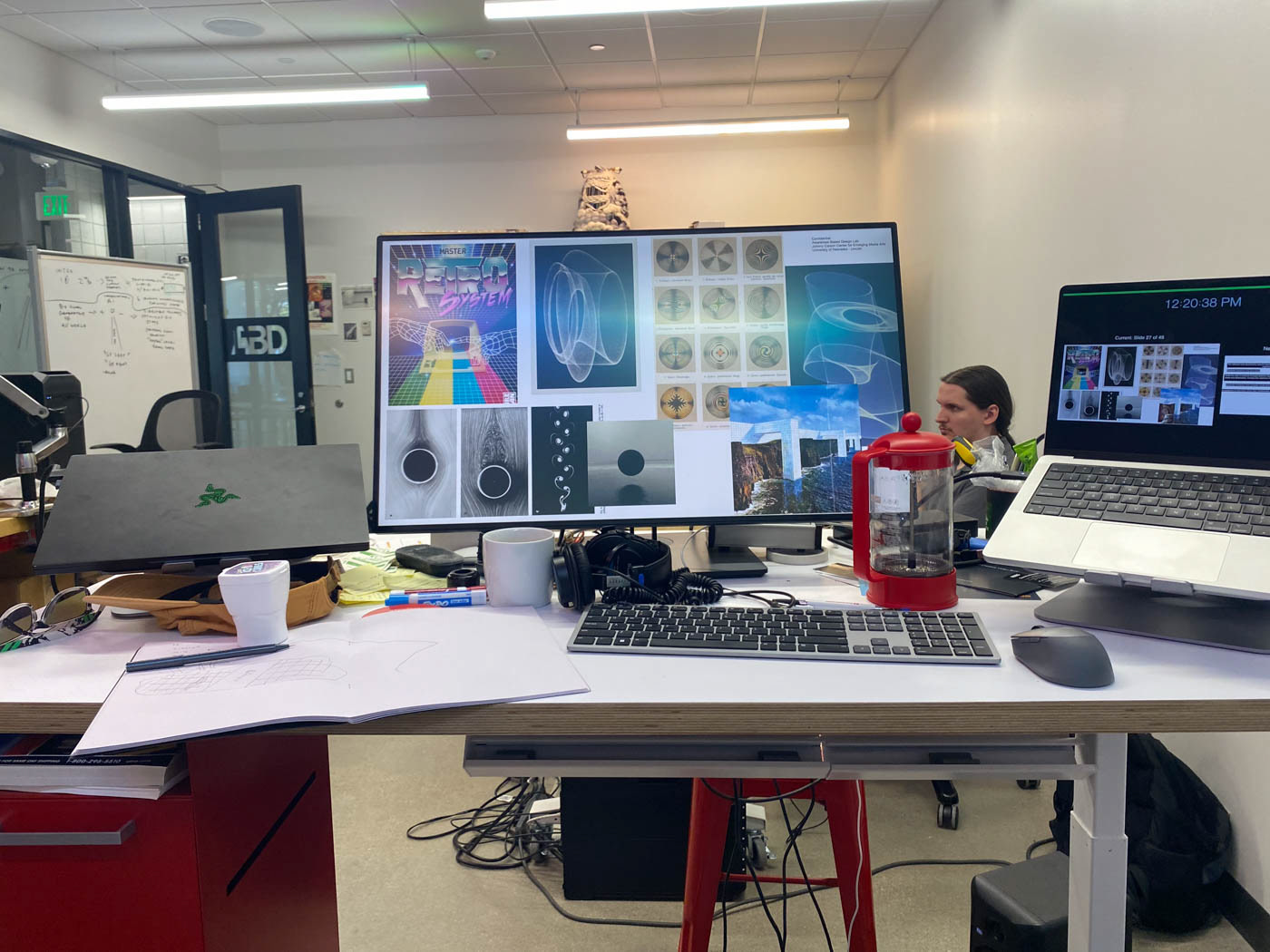

V2 is in development as an installation with a modified custom LLM and reclined immersion.

Concept drawing for the upcoming installation, featuring sub-frequency haptics, binaural audio with multiple horn oscillation, and a real-time display of the user's eye movements on two low-resolution LED screens.

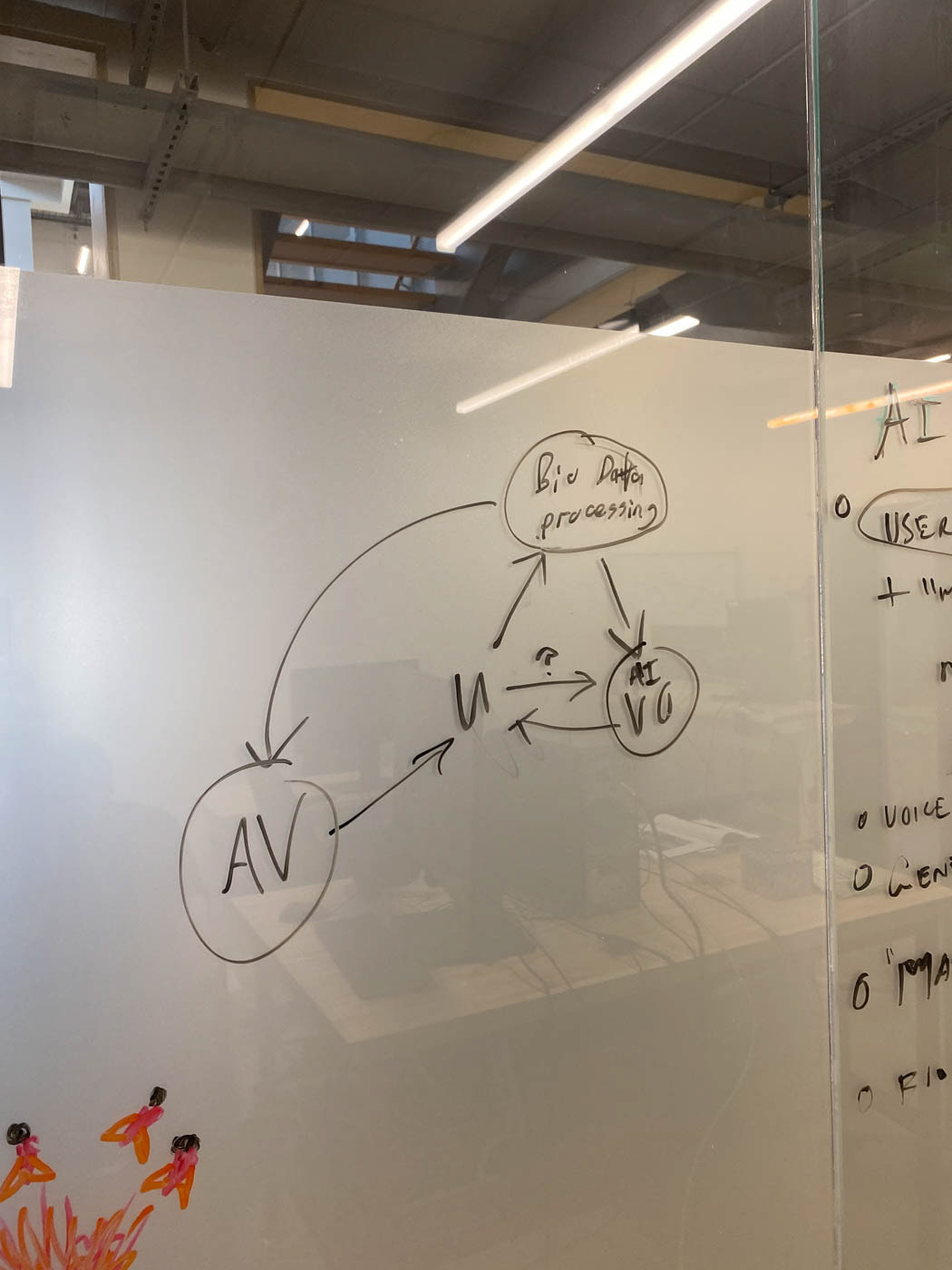

Ongoing experiments using in-VR eye-tracking camera feeds as a biofeedback mechanism.

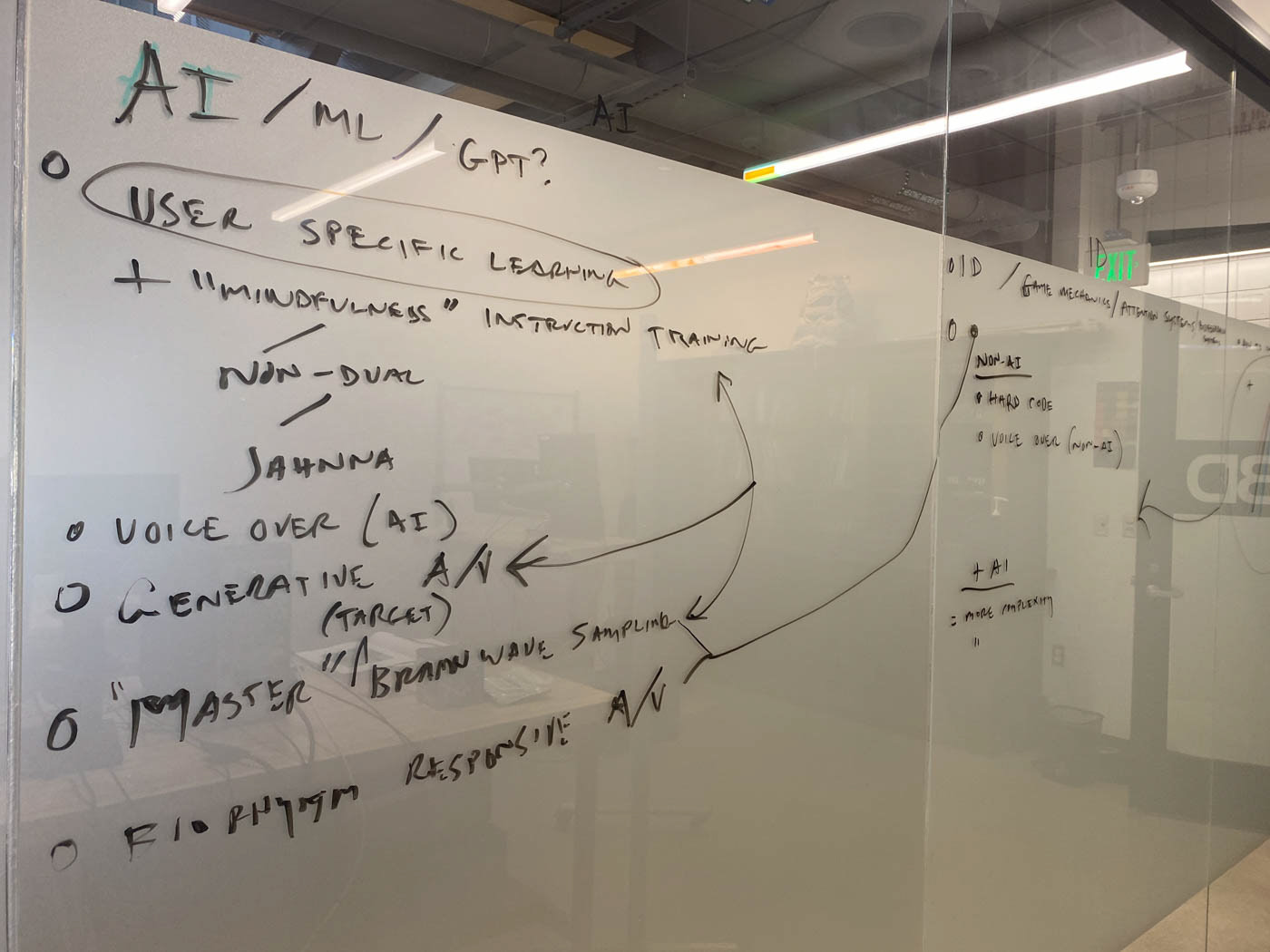

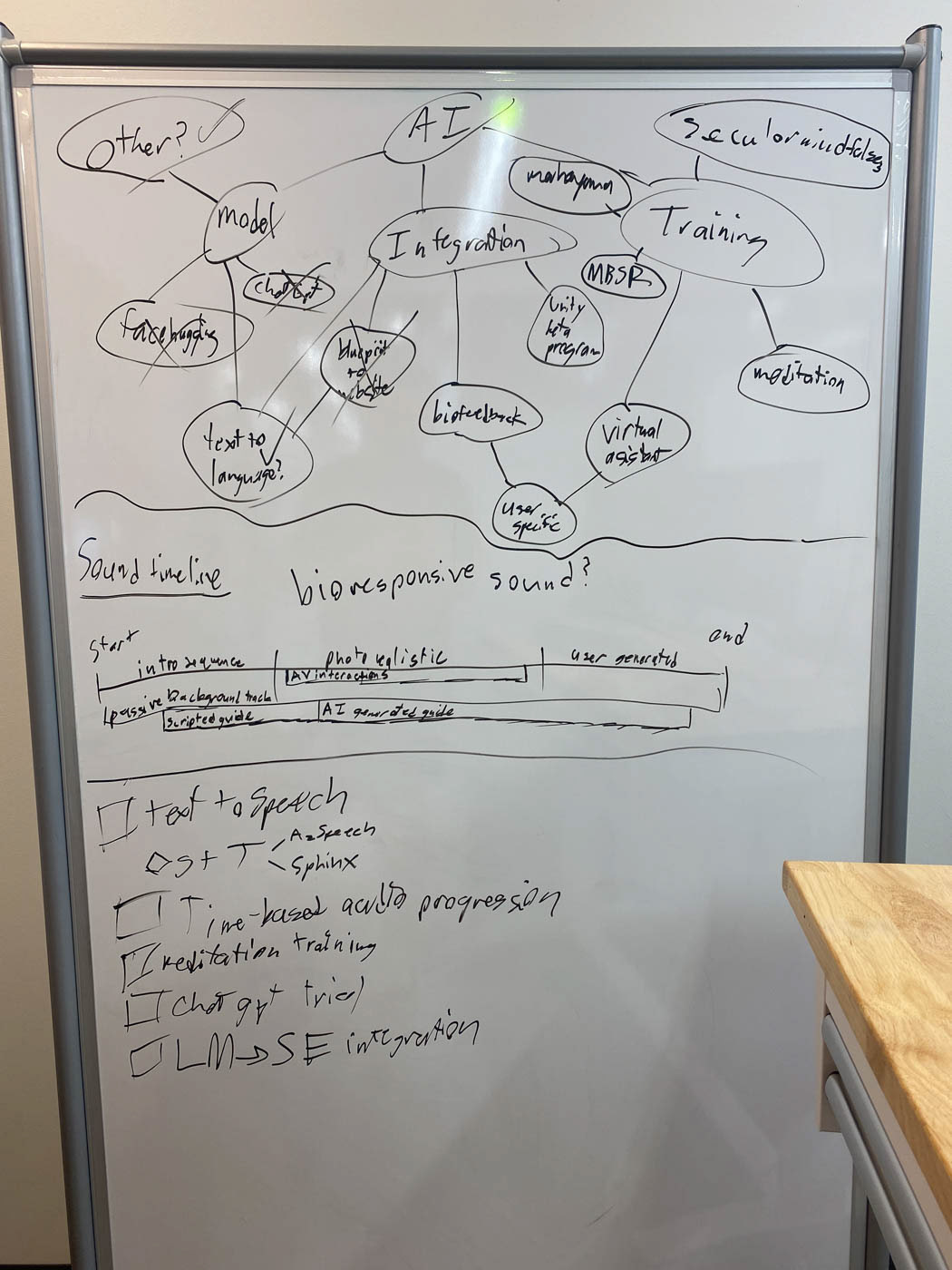

A.L.B.E.R.T. (the Awareness Lab's Big Enlightenment Realization Technology) is a sincere and cautionary exploration of a possible future of AI-assisted immersive biofeedback learning. As a functioning prototype, it uses multimodal bio-sensing and feedback, real-time algorithmic cognitive assessment, generative visual environments, and participant-specific learning. The visual environment, UI, mental maps, and auditory feedback all reinforce the user's learning. However, the AI guru and its human-assisted training data are obscured behind its charming, friendly, personified nature. This video shows the interaction between A.L.B.E.R.T. and a participant. When the participant's focus stays within the range the algorithm has been coded to treat as optimal, the fractal continues to expand in scale, encompassing the user and deepening their immersion. When their attention wanes, the fractal contracts and moves further from the user's field of view.
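
The expand/contract behavior described above can be pictured as a simple per-frame feedback loop. The sketch below is purely illustrative and is not A.L.B.E.R.T.'s actual code. The normalized attention score in [0, 1], the "optimal" band, the step rate, and the clamping limits are all hypothetical assumptions, as are the names `FractalState` and `update_fractal`.

```python
from dataclasses import dataclass

# Hypothetical limits; the real installation's values are not published here.
MIN_SCALE, MAX_SCALE = 0.1, 10.0
MIN_DISTANCE, MAX_DISTANCE = 1.0, 20.0


@dataclass
class FractalState:
    scale: float = 1.0     # relative size of the fractal around the viewer
    distance: float = 5.0  # distance from the viewer, in arbitrary scene units


def update_fractal(state: FractalState, attention: float,
                   optimal_band: tuple[float, float] = (0.6, 1.0),
                   rate: float = 0.05) -> FractalState:
    """Hypothetical per-frame update: while the (assumed) attention score sits
    inside the optimal band, the fractal grows and approaches the viewer;
    otherwise it contracts and recedes."""
    low, high = optimal_band
    if low <= attention <= high:
        # Focus is "optimal": expand the fractal and bring it closer.
        scale = min(state.scale * (1 + rate), MAX_SCALE)
        distance = max(state.distance * (1 - rate), MIN_DISTANCE)
    else:
        # Attention has waned: contract the fractal and push it away.
        scale = max(state.scale * (1 - rate), MIN_SCALE)
        distance = min(state.distance * (1 + rate), MAX_DISTANCE)
    return FractalState(scale=scale, distance=distance)


if __name__ == "__main__":
    state = FractalState()
    # Simulated attention readings: three focused frames, then two distracted ones.
    for attention in [0.8, 0.9, 0.7, 0.3, 0.2]:
        state = update_fractal(state, attention)
        print(f"attention={attention:.1f} scale={state.scale:.3f} "
              f"distance={state.distance:.3f}")
```

Multiplicative steps keep each change proportional to the fractal's current size, so growth and contraction stay gradual. In practice, a raw biosensor signal may also need smoothing before it drives a loop like this.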

The experience is narrative, experiential, generative, and responsive to the participant's biorhythms, which are used to determine attention. The environment, UI, mental maps, and guided feedback (provided by an artificial intelligence large language model trained on secular mindfulness) all adapt based on targets defined by the lab and the hardware.

This emergent and advanced technology may be crude compared to the elegance of thousands of years of tradition, tools, and technique from qualified teachers. Yet the ambition and possibilities may be the same: to help someone discover themselves, train their attention, and explore their ontology through instruction, interpretation, and feedback. This platform (and others like it now emerging) uses a media environment that generates itself based on the participant's internal and external states, potentially illuminating unique opportunities for growth in the field of mindfulness or other contemplative modalities.

Team:

Jesse Reding Fleming: Director / Lead

Shane Bolan: Technical Director + Game Engine Developer

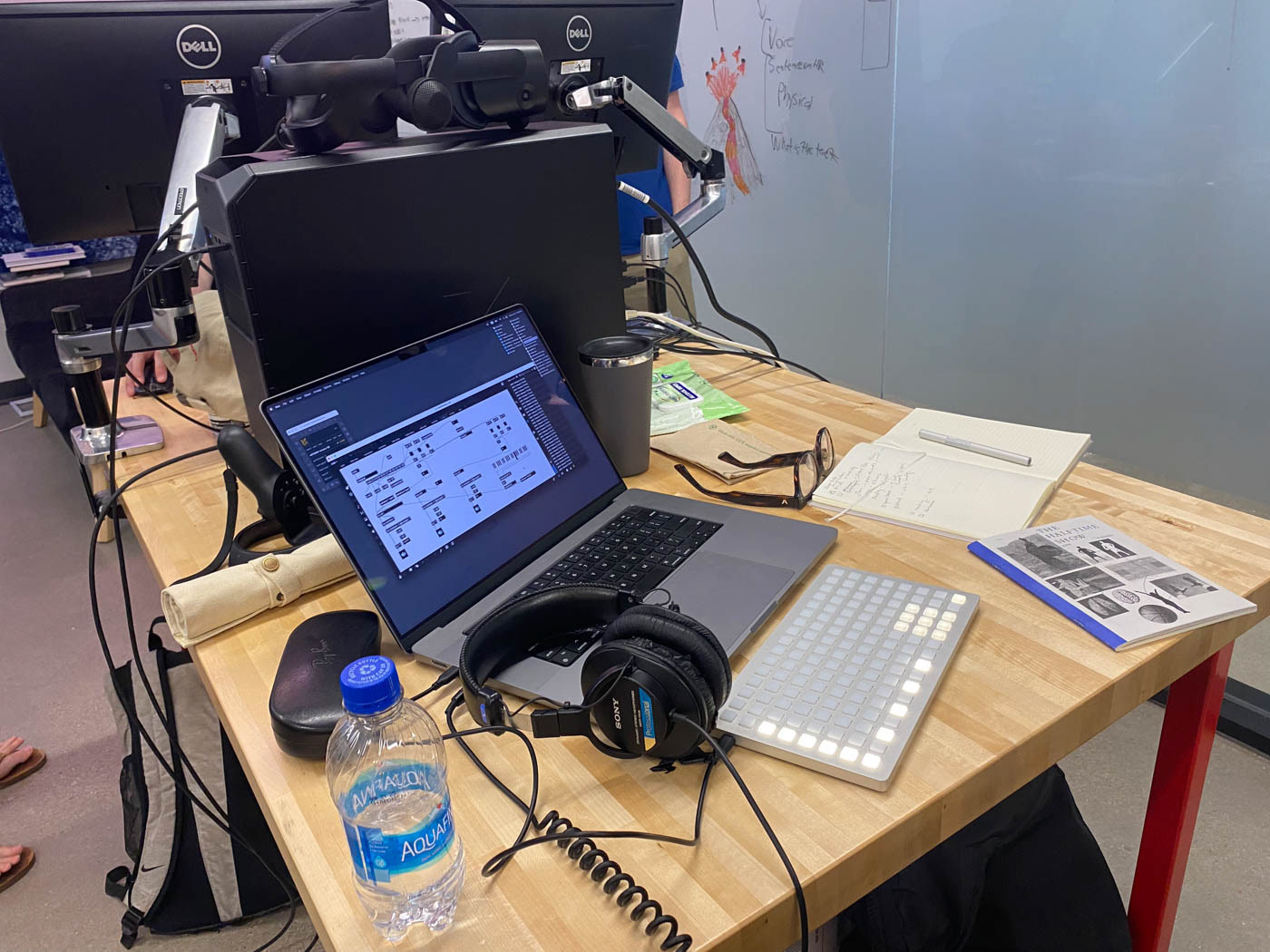

Trystan Nord: Game Engine Developer + Sound Design

Max Urbany: Machine Learning + Artificial Intelligence Design and Development

Mohammad Rashedul Hasan: UNL AI expert / Assistant Professor, Electrical and Computer Engineering

Ahatsham Fnu: UNL Graduate Research Assistant, Electrical and Computer Engineering

Jay Kreimer: Composer / Sound Design

Maital Neta: UNL cognitive scientist / Associate Professor of Psychology

Mike Dodd: UNL cognitive scientist / Professor of Psychology

HP Educause / HP Omnicept: Equipment Sponsor

Funded by the University of Nebraska's Office of Research and Economic Development (ORED) and the Awareness Lab (tAL).